Hi!

I am Zihao Xu (徐 子昊), a fourth-year PhD candidate in Computer Science at Rutgers University, advised by Professor Hao Wang. My research interests span Large Language Models (LLMs), Domain Adaptation, Recommendation Systems, and Bayesian Deep Learning. I received my undergraduate degree in Computer Science from Shanghai Jiao Tong University, a top-ranked university in China, where I was a member of the ACM Honors Class, a prestigious program for the top 5% of Computer Science students at the university. Notable alumni of the program include Tianqi Chen (Assistant Professor at Carnegie Mellon University), Mu Li (former Senior Principal Scientist at Amazon), and Bo Li (Assistant Professor at Harvard Medical School).

News

- Feb. 25th, 2025: Our paper "Implicit In-context Learning" has been accepted to ICLR 2025. Code is released.

- Nov. 25th, 2024: Our paper "Continual Learning of Large Language Models: A Comprehensive Survey" has been accepted to ACM Computing Surveys (2024).

- Sep. 27th, 2024: Our paper "Towards a Generalized Bayesian Model of Reconstructive Memory: A Generalized Model of Reconstructive Memory" has been accepted to Computational Brain & Behavior.

- Jun. 30th, 2023: Our paper "Taxonomy-Structured Domain Adaptation" has been accepted to ICML 2023. Code is released.

- Jan. 21st, 2023: Our paper "Domain-Indexing Variational Bayes: Interpretable Domain Index for Domain Adaptation" has been accepted to ICLR 2023 as a spotlight. See our code and OpenReview page for more details.

- Feb. 8th, 2022: Our paper "Graph-Relational Domain Adaptation" has been accepted to ICLR 2022. Code is released.

Selected Publications

“*” indicates equal contribution.

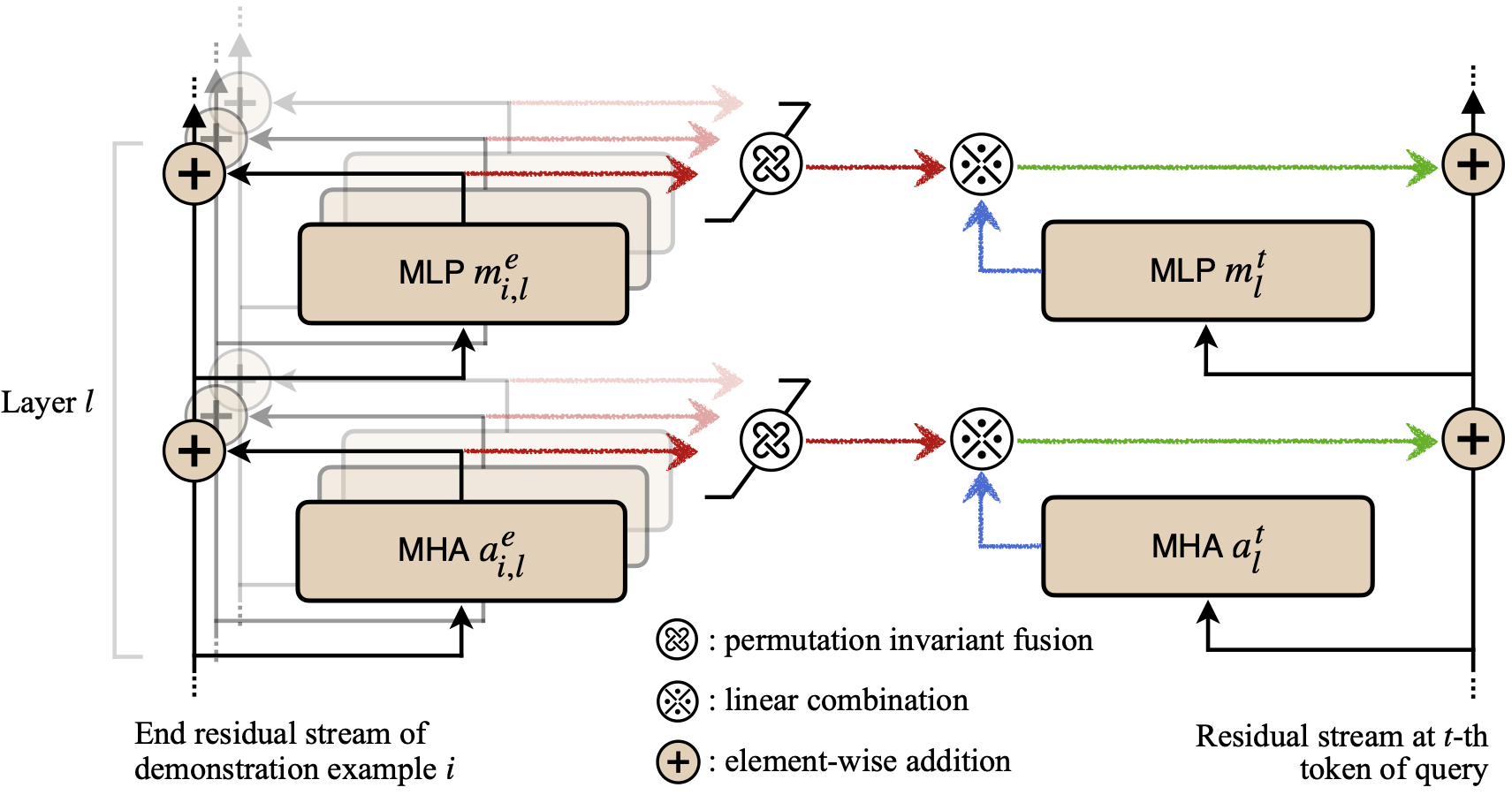

Implicit In-context Learning

Zhuowei Li, Zihao Xu, Ligong Han, Yunhe Gao, Song Wen, Di Liu, Hao Wang, Dimitris N. Metaxas

International Conference on Learning Representations (ICLR) 2025

[paper] [code (and data)]

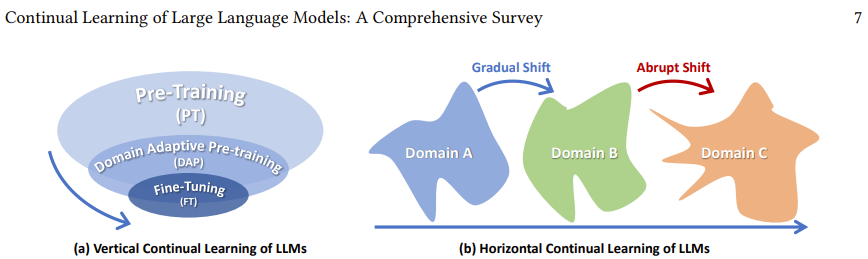

Continual Learning of Large Language Models: A Comprehensive Survey

Haizhou Shi, Zihao Xu, Hengyi Wang, Weiyi Qin, Wenyuan Wang, Yibin Wang, Hao Wang

ACM Computing Surveys, 2024

[paper]

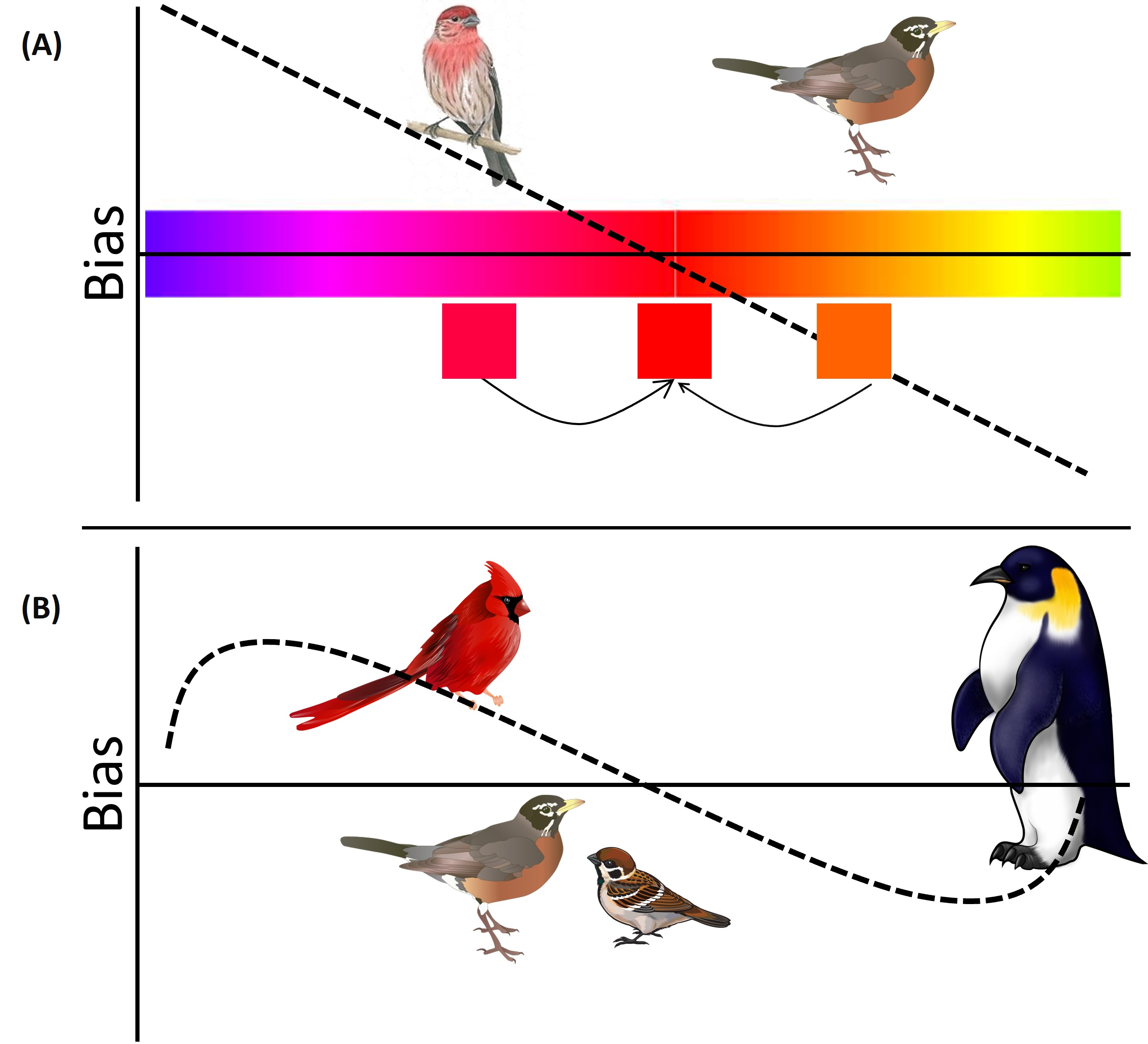

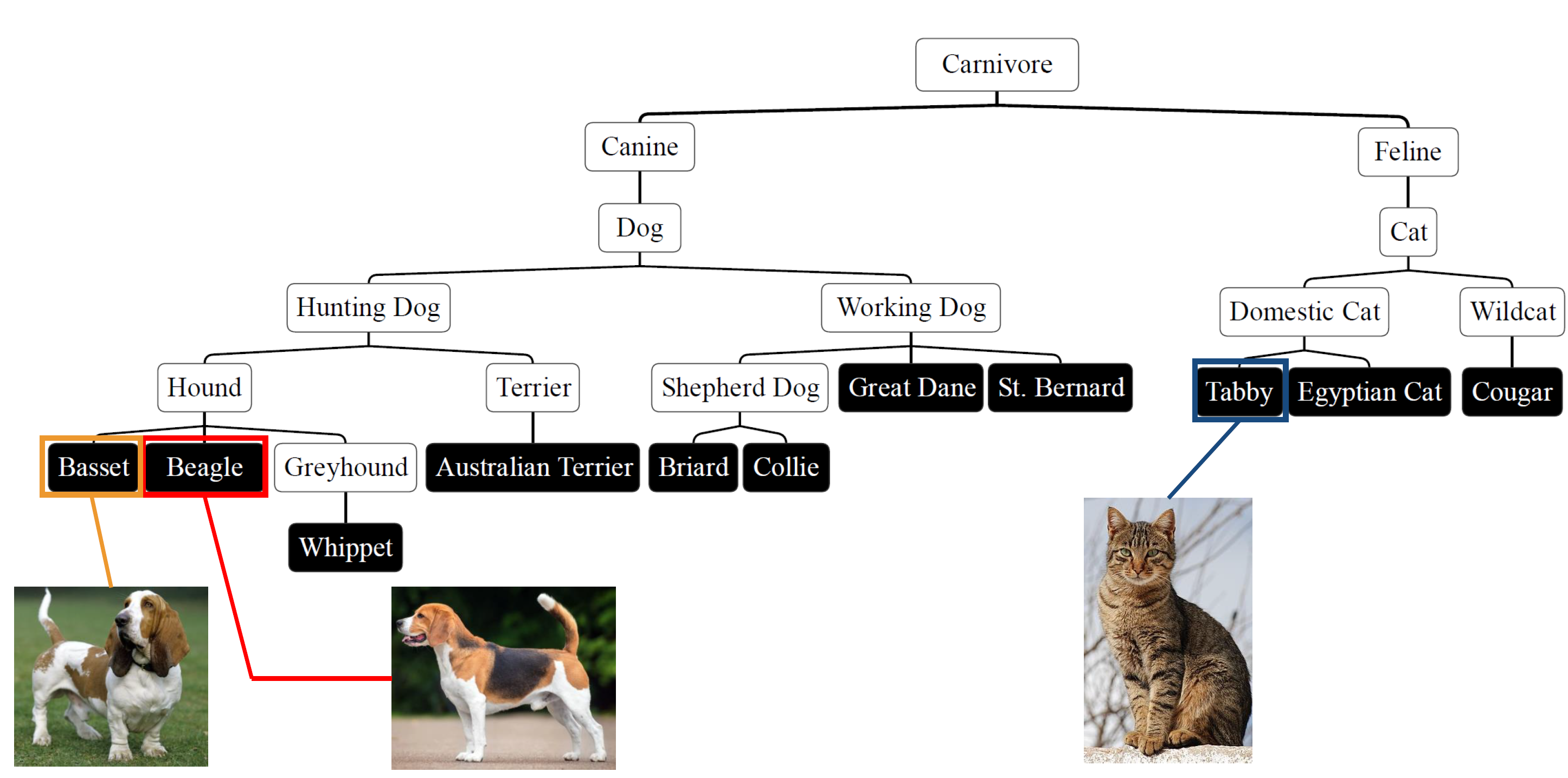

Taxonomy-Structured Domain Adaptation

Tianyi Liu*, Zihao Xu*, Hao He, Guang-Yuan Hao, Hao Wang

International Conference on Machine Learning (ICML) 2023

[paper] [code (and data)] [talk] [slides]

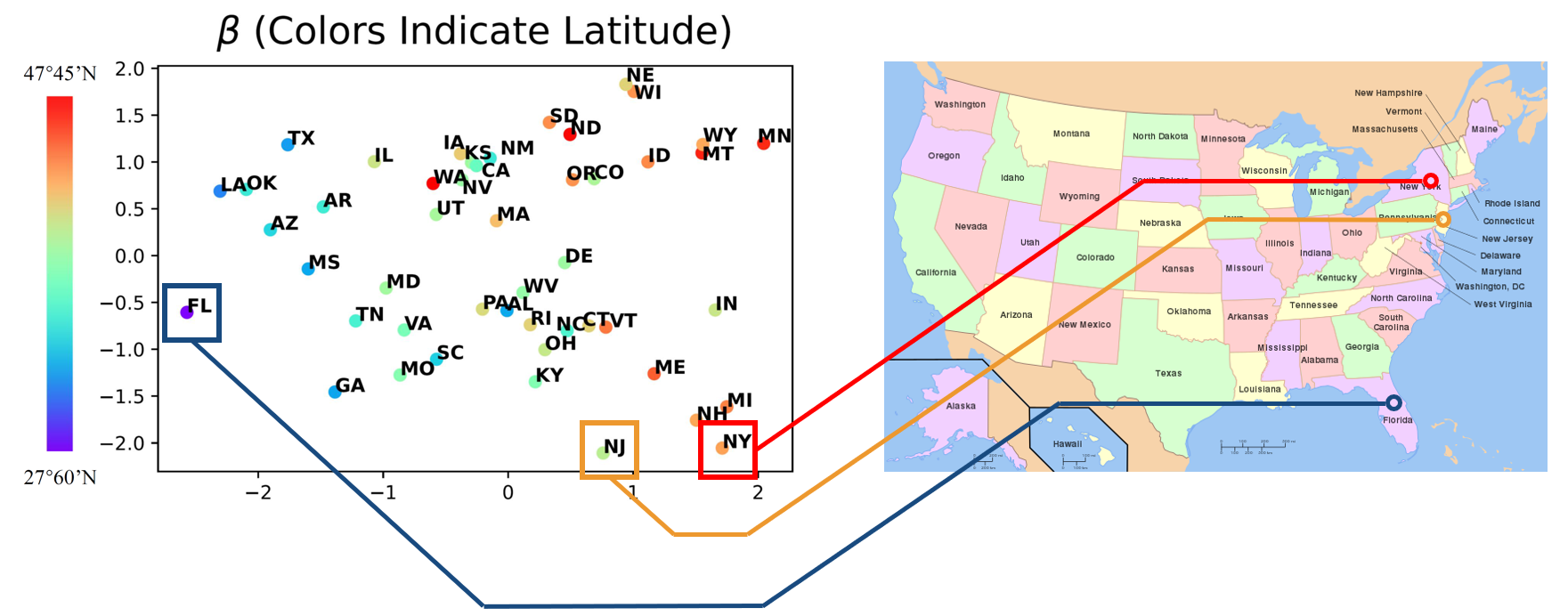

Domain-Indexing Variational Bayes: Interpretable Domain Index for Domain Adaptation

Zihao Xu*, Guang-Yuan Hao*, Hao He, Hao Wang

(Spotlight) International Conference on Learning Representations (ICLR) 2023

[paper] [code (and data)] [talk] [slides]

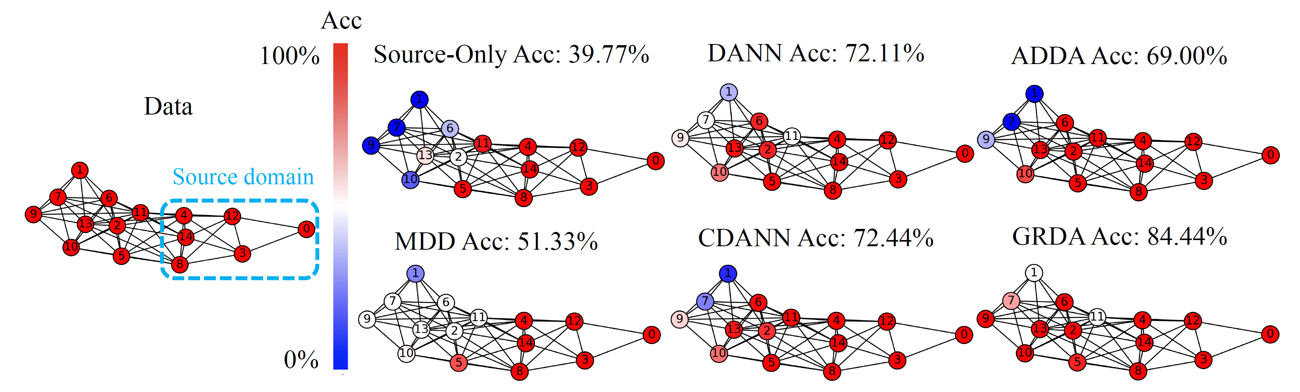

Graph-Relational Domain Adaptation

Zihao Xu, Hao He, Guang-He Lee, Yuyang Wang, Hao Wang

International Conference on Learning Representations (ICLR) 2022

[paper] [code (and data)] [talk] [slides] [website]